iTalk2Learn has opened our eyes to new possibilities by highlighting the influence of emotion and speech on students’ learning.

One of the core differentiators at Whizz Education (Whizz) is its ability to engage students in the learning process using diverse, interactive content. Being able to detect and respond to students’ affective states will make the Whizz platform even more personalised for each child.

Having established long-term relationships with many schools in the UK, Whizz Education has access to the human side of user experience. So far this year, Whizz has visited six primary schools in London to capture voice recordings for the speech recognition component of iTalk2Learn. The visits have also given us an opportunity to promote interest in the iTalk2Learn project amongst existing ‘Whizzers’.

The children we visited were excited about the project. This is not surprising, since the latest technology trends are generally of particular interest to them. Moreover, being part of a project as substantial as the development of Automatic Speech Recognition (ASR) technology made our students even more enthusiastic, and even proud, to get involved.

Talk and Learn

Even though it seems quite unusual to talk to one’s computer screen, it is interesting to see that most children do in fact speak when they do maths! Our main aim was to make them feel completely relaxed and unaware of everything going on around them while they were studying.

After the first few minutes of confusion, students stopped noticing the adults in the room and were happy to comment and calculate aloud, using gestures such as counting on their fingers. More importantly, they expressed thoughts and feelings about the relative difficulty of each exercise and generally became more chatty and active.

In collaboration with the University of Hildesheim (UHi) and Birkbeck College (BBK), we have developed codes to measure students’ emotions and perceived difficulty during the voice recordings. One of the potential applications of the iTalk2Learn project is to enable the Maths-Whizz Tutor to interact with students within and between exercises by monitoring their speech and emotions. This, in turn, will allow us to replicate the behaviour of a human tutor more closely than ever before.

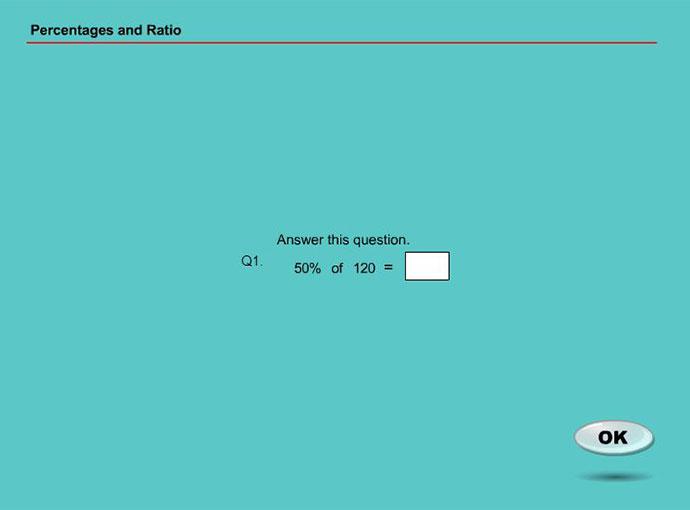

Exercise from Year 6 – Percentages.

In general, a virtual online tutor would accept the first question as successfully answered, regardless of how long the student took to solve it, whereas a human tutor would notice the student’s doubts and pick up on audio cues such as ‘not sure’, ‘maybe’ and ‘okay let’s try this’. The online tutor would also allow students to proceed to the next level in the same topic, whereas a human tutor might assign them more questions at the same level to consolidate their knowledge.

Test from Year 6 – Percentages and Ratio.

It would be useful for virtual online tutors to be able to distinguish between students who answer a question with confidence and those who express doubt. With this ability, the iTalk2Learn platform would be able to take on the role of a human tutor, observing subtle changes in behaviour and assigning the right task in almost the same way a human tutor would. We say ‘almost’ because the research is still at a fairly early stage. The iTalk2Learn consortium is amongst the first to seek to ‘further the frontiers of online virtual tutoring’.

Certificate for Whizzers who participated in the iTalk2Learn project.

If you would like to get involved with voice recordings for the iTalk2Learn project, get in touch or leave a comment below!