iTalk2Learn partner, University of Hildesheim, organised a Workshop on Feedback from Multimodal Interactions in Learning Management Systems at the 7th International Conference on Educational Data Mining

A workshop on Feedback from Multimodal Interactions in Learning Management Systems took place at the 7th International Conference on Educational Data Mining in London on 4th July 2014. The workshop was organised by iTalk2Learn partner, University of Hildesheim. The iTalk2Learn project was represented at the workshop by two talks on emotion and affect recognition in intelligent tutoring systems.

Aims and Topics of the Workshop

Virtually all learning management systems and tutoring systems provide feedback to learners based on the time they spend within the system, the number, intensity and type of tasks they work on, and their past performance on these tasks and the corresponding skills. Some systems even use this information to steer the learning process through interventions such as recommending specific next tasks or providing hints. Often, however, the analysis of learner/system interactions is limited to these high-level interactions and does not make good use of the information available in much richer interaction types such as speech and video.

In the workshop, Feedback from Multimodal Interactions in Learning Management Systems, we wanted to bring together researchers and practitioners who are interested in developing data-driven feedback and intervention mechanisms based on rich, multimodal interactions of learners within learning management systems, and among learners providing mutual advice and help.

We aimed to discuss all stages of the process: preprocessing raw sensor data, automatically recognising affective states, and learning to identify salient features in these interactions that provide useful cues for steering feedback and intervention strategies, leading to adaptive and personalized learning management systems. The contributions presented at the workshop ranged from work on affect recognition in intelligent tutoring systems to research questions from online learning and collaborative learning.

Talks at the Workshop

The workshop started with two talks about emotion and affect recognition in adaptive intelligent tutoring systems:

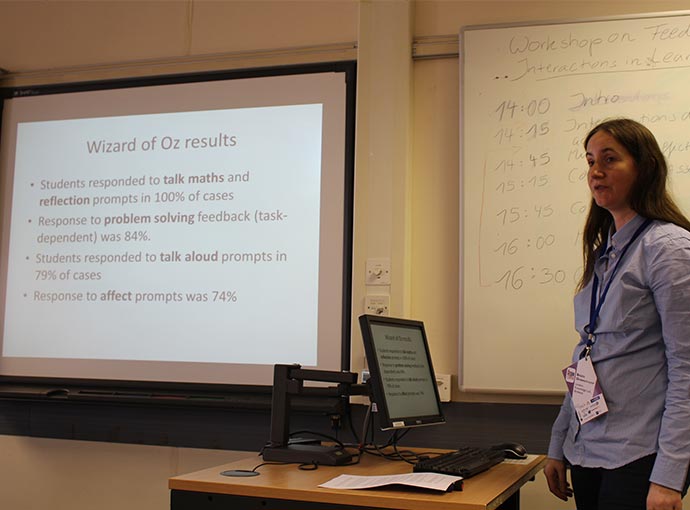

- Interventions During Student Multimodal Learning Activities: Which, and Why?, presented by Beate Grawemeyer, Manolis Mavrikis, Sergio Gutierrez-Santos and Alice Hansen

- Multimodal Affect Recognition for Adaptive Intelligent Tutoring Systems, presented by Ruth Janning, Carlotta Schatten and Lars Schmidt-Thieme

The paper Interventions During Student Multimodal Learning Activities: Which, and Why? [1] describes a Wizard-of-Oz study that investigates the potential of automatic speech recognition, combined with an emotion detector that classifies emotions from speech, to support young children in their exploration and reflection whilst working with interactive learning environments.

Task sequencing in traditional intelligent tutoring systems requires information derived from expert and domain knowledge. The paper Multimodal Affect Recognition for Adaptive Intelligent Tutoring Systems [2] aims to support a new, efficient task sequencer, which needs only past performance information, with automatically obtained multimodal input such as speech input from the students. For this purpose, appropriate speech features are proposed and investigated.

The second part of the workshop treated research questions from the field of collaborative learning and consisted of three talks:

- Collaborative Assessment, presented by Patricia Gutierrez, Nardine Osman and Carles Sierra

- Mining for Evidence of Collaborative Learning in Question & Answering Systems, presented by Johan Loeckx

- Creative Feedback: a manifesto for social learning, presented by Mark d’Inverno and Arthur Still

The paper Collaborative Assessment [3] introduces an automated assessment service for online learning support in the context of communities of learners. The goal is to provide automatic tools that support the task of assessing the massive numbers of students found in Massive Open Online Courses. The findings in the paper Mining for Evidence of Collaborative Learning in Question & Answering Systems [4] illustrate how the collaborative nature of feedback can be measured in online platforms, and how users who need encouragement to participate in collaborative activities can be identified.

In order to ground and motivate the definition and use of “creative feedback”, the paper Creative Feedback: a manifesto for social learning [5] takes a historical look at the two concepts of creativity/creative and feedback. The intention is to use this rich history to motivate both the choice of the two words and the reason for bringing them together.

Overall, the workshop was a great success, with about 25 participants and very interesting and fruitful discussions about multimodal interactions in learning management systems.

References:

[1] Grawemeyer, B., Mavrikis, M., Gutierrez-Santos, S. and Hansen, A. 2014. Interventions during student multimodal learning activities: which, and why? In Extended Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014).

[2] Janning, R., Schatten, C. and Schmidt-Thieme, L. 2014. Multimodal Affect Recognition for Adaptive Intelligent Tutoring Systems. In Extended Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014).

[3] Gutierrez, P., Osman, N. and Sierra, C. 2014. Collaborative Assessment. In Extended Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014).

[4] Loeckx, J. 2014. Mining for Evidence of Collaborative Learning in Question & Answering Systems. In Extended Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014).

[5] d’Inverno, M. and Still, A. 2014. Creative Feedback: a manifesto for social learning. In Extended Proceedings of the 7th International Conference on Educational Data Mining (EDM 2014).